There was also a time when most of the universe was at the perfect temperature and density to cook pizza, I guess.

Doing the Lord’s work in the Devil’s basement

Ok that one is hilarious

Same. I remember playing the original on an Amstrad in the 90s and it was already mind-blowing. I was so happy they remade it, and even happier that they barely changed anything about it.

Le ai Bad amirite guize

Arch Linux is a good alternative to Linux and is a good choice for most use cases where you can use it for a variety of tasks and and it is a good fit to Linux and Linux.

Yeah, it always strikes me how religious extremism is framed. You rarely hear about Christian extremists, who operate in the open on all social networks.

Yet, you could argue that Christian extremists have done more harm to western societies in the last 20 years than any Islamic group.

That’s a nice hypothetical but the facts of this case are much simpler. Would you agree that a country is sovereign, and entitled to write its own laws? Would you agree that a company has to abide by a country’s laws if it wants to operate there? Even an American company? Even if it is owned by a billionaire celebrity?

What is complicated about alchemy is that it’s a tradition that is thousands of years old and it has so many layers it’s hard to make sense of.

Originally you have metallic alchemy, a precursor to chemistry and metallurgy. An insanely valuable corpus of knowledge if you think about ancient times: good metallurgy made good armies, which made empires. It was technology so advanced it might as well have been magic. The texts that have survived are very opaque by design, and hard to read because of cultural jetlag, but they are technical texts: tutorials and explainers for the various chemical and alloying operations that were known at the time.

Utility outside of use as a metaphor: 10/10 if you’re kind of done with Bronze and want to boost your kingdom into the Iron Age.

Then, around the Renaissance, when antique stuff started becoming hot again, those texts started buzzing and were reinterpreted with a generous flavouring of Renaissance spirituality. That’s pretty easy with antique technical texts because they are always written with a lot of religious and astrological terminology. It could be about plumbing and you’d still have Apollo fighting Hades as an allegory for you unclogging a pipe or whatever. So, to a Renaissance reader, it made sense to see them as spiritual guidebooks to the transformation and purification of the self. That’s also when they started pumping the gas on the “philosopher’s stone” ideas of turning mercury into gold, becoming immortal, etc. The technical aspects of the texts faded into the background.

Utility outside of use as a metaphor: 0/10, although you’ll get some beautiful, evocative literature out of it. Some seriously trippy stuff if you’re into that sort of thing.

Then you have the 19th century onwards, with a literal explosion of books, treatises and translations, and it gets even more divorced from the source material as the academic work gets shoddier and shoddier. At this point the technical aspects are mostly lost on the readers because they make no sense in the context of early-industrial metallurgy and chemistry.

Utility outside of use as a metaphor: 0/10, kind of new-agey for my taste. It has a lot of cultural relevance, though. Being well-read in early modern hermeticism is kind of the Rosetta Stone of popular culture lol.

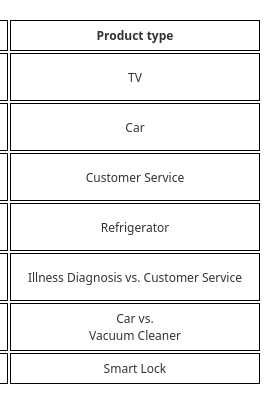

At least acc. to TechSpot, the negative sentiment is general. It’s just more pronounced for some products (high risk and/or price) than others.

That’s not what the study says. I’m no AI-hater but I would sure stay away from an AI car or medical diagnosis. Those products make absolutely no sense.

So even where plopping an LLM or similar would make sense, there’ll likely be strong market resistance.

I work tangentially to the industry (not making models, not making apps based on models, but making tools to help people who do) and that is not what I observed. Just like in every market, products that make sense make fucking bank. It’s mostly boring B2B stuff which doesn’t make headlines, but there is some money being made right now, with some very satisfied customers.

The “market resistance” story is anecdotal clickbait.

Eh that one study was mostly about stupid products like “AI coffee machine” or “AI fridge”. AI products that make sense sell pretty well.

Seveneves would be the bomb (ETA: they could even do the last part as a separate animated short)

Just blocked politics & news and my quality of life instantly jumped 200%. I highly recommend it. If something significant happens, you’ll read about it in another community.

Even if you were extremely generous and didn’t factor the scams into your analysis, the reality is that a blockchain solves problems 99.9% of people will never face. The whole imagined model breaks down when your product is ultra-niche but relies on mass adoption for its security.

Hey man, I’m not saying you’re wrong, but you’re touching on another important thing, which is trust. On average, high-trust people are just easier to manage, especially when you’re a small outfit. It’s better for everyone if low-trust users bounce away because of the credit card wall. They’ll come back once the product has some brand recognition or social proof.

Then these models are stupid

Yup that is kind of the point. They are math functions designed to approximate human tasks.

These models should start out with the basics of language, so they don’t have to learn it from the ground up. That’s the next step. Right now they’re just well-read idiots.

I’m not sure what you’re pointing at here. How they do it right now, simplified, is you have a small model designed to cut text into tokens (“knowledge of syllables”), which are fed into a larger model which turns tokens into semantic information (“knowledge of language”), which is fed to a ridiculously fat model which “accomplishes the task” (“knowledge of things”).
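To make the first two stages a bit more concrete, here is a toy Python sketch. Everything in it is a hypothetical choice for illustration: the tiny hand-written vocabulary, the function names (`tokenize`, `make_embedding_table`, `embed`) and the greedy longest-match rule. Real tokenizers (BPE, for example) learn their pieces from data, and in many real systems the embedding table is the first layer of the big model rather than a separate model. The "ridiculously fat model" stage is left out entirely.

```python
import random

# HYPOTHETICAL toy vocabulary, hand-picked for this example only.
# Real tokenizers learn tens of thousands of pieces from data.
VOCAB: dict[str, int] = {
    "un": 0, "clog": 1, "ging": 2, "the": 3, "pipe": 4, " ": 5,
}


def tokenize(text: str, vocab: dict[str, int]) -> list[str]:
    """Stage 1 (toy): greedy longest-match, always taking the longest vocab piece."""
    tokens = []
    i = 0
    max_len = max(len(piece) for piece in vocab)
    while i < len(text):
        # Try the longest candidate first, then shrink until something matches.
        for length in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + length]
            if piece in vocab:
                tokens.append(piece)
                i += length
                break
        else:
            raise ValueError(f"no vocabulary entry matches {text[i]!r}")
    return tokens


def make_embedding_table(vocab_size: int, dim: int, seed: int = 0) -> list[list[float]]:
    """One vector per token ID. Random placeholders here; a trained model learns them."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]


def embed(token_ids: list[int], table: list[list[float]]) -> list[list[float]]:
    """Stage 2 (toy): look up the vector for each token ID."""
    return [table[t] for t in token_ids]


if __name__ == "__main__":
    tokens = tokenize("unclogging the pipe", VOCAB)
    print(tokens)  # ['un', 'clog', 'ging', ' ', 'the', ' ', 'pipe']
    ids = [VOCAB[t] for t in tokens]
    print(ids)  # [0, 1, 2, 5, 3, 5, 4]
    vectors = embed(ids, make_embedding_table(len(VOCAB), dim=4))
    print(len(vectors), len(vectors[0]))  # 7 4
```

The output of `embed` is the kind of thing that would then be handed to the big model; the vectors only carry "semantic information" once they have been trained, which these random ones have not.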

The first two models are small enough that they can be trained on the kind of data you describe, classic books, movie scripts etc… A couple hundred billion words maybe. But the last one requires orders of magnitude more data, in the trillions.

Again, it’s a filter. You give me card details, which means you seriously want the product and also that you trust me. If I treat you right and give you a great experience, you’ll be subscribed for years, purchasing add-ons, and recommending me to your friends. That’s much more valuable to me than skimming 20 bucks a month because you forgot to unsubscribe.

When it’s a big corpo, sure, they’ll do it cause they don’t give a shit about their customers or even their reputation. I’m honestly not saying it doesn’t happen. But when it’s a no-name with a small online product, they can’t afford that shit. If they put up a credit card wall, it’s most likely because they were getting too many people on the free trial and were having a hard time telling actual future customers from drive-bys. This solves that.

That’s what smaller models do, but it doesn’t yield great performance because there’s only so much of that material available. To get to GPT-4 levels you need a lot more data, and to break the next glass ceiling you’ll need even more.

lmao what a nuanced point of view, you seem to have vast personal experience of this kind of things

Then there are games like the original “Pirates!”. It has copy protection that presents itself as a simple question like “do you recognize whose pirate flag that is”. The answer is in the booklet, and if you answer wrong nothing visible happens, but the difficulty is cranked so high that the game becomes effectively unbeatable.