I’ve seen a lot of sentiment around Lemmy that AI is “useless”. I think this tends to stem from the fact that AI has not delivered on, well, anything the capitalists pushing it have promised. That is to say, it has failed to meaningfully replace workers with a less expensive solution: AI systems that actually attempt to replace people’s jobs are incredibly expensive (and environmentally irresponsible), and the companies behind them simply lie and say they’re not. It’s all subsidized by that sweet, sweet VC capital so they can keep the lie up. And I say “attempt” because AI is truly horrible at actually replacing people. It’s going to make mistakes, and while everybody’s been trying real hard to make it less wrong, it’s just never gonna be “smart” enough to not need a human reviewing its behavior. Then you’ve got AI being shoehorned into every little thing that really, REALLY doesn’t need it. In that sense, I’d agree that AI is useless.

But AIs have been very useful to me. For one thing, they’re much better at googling than I am. They save me time by summarizing articles to give me just the broad strokes, and I can decide from there whether I want to go into the details. They’re also good idea generators: I’ve used them in creative writing just to explore things like “how might this story go?” or “what are interesting ways to describe this?”. I never really use what comes out of them verbatim, whether image or text, but it’s a good way to explore, and seeing things expressed in ways you never would’ve thought of (and the juxtaposition of seeing them next to very obvious expressions) tends to push your mind in new directions.

Lastly, I don’t know if it’s just because there’s an abundance of Japanese language learning content online, but GPT-4o has been incredibly useful for learning Japanese. I can ask it things like “how would a native speaker express X?” and it gives me good answers that even my Japanese teacher agreed with. It can also give incredibly accurate breakdowns of grammar. I’ve tried less popular languages like Filipino and it just isn’t the same, but as far as Japanese goes, it’s like having a tutor on standby 24/7. In fact, that’s exactly how I’ve been using it: I have it grade my own translations and give feedback on what could’ve been said more naturally.

All this to say, AI when used as a tool, rather than a dystopic stand-in for a human, can be a very useful one. So, what are some use cases you guys have where AI actually is pretty useful?

It’s perfect for topics you have professional knowledge of but don’t have perfect recall for. It can bring forward the context you need a refresher on, and you can fact-check it because you are an expert in that field.

If you need boilerplate code for a project but don’t remember the specific library or built-in function that tackles your problem, you can use AI to generate an example that you then fix to make it run the way you want.

The same goes for finding config examples for a program that isn’t well documented but that you’re familiar with.

Sorry all my examples are tech nerd stuff, I’m just another tech nerd on Lemmy.

Conversely, I’ve found it to be quite bad at that. I can generally count on the AI answer being fundamentally wrong.

Might depend on your industry. It’s garbage at G-code.

It probably depends on how many good examples it has to pull together from Stack Overflow etc. It’s usually fine writing Python, JavaScript, or PowerShell, but if you have any level of specific needs, it will just hallucinate a fake module or library, usually a couple of words from your prompt mashed into a function name. Still, it’s usually good enough for me to get started: either I write my own code, or it gives me enough context that I can google what the actual module is and find some real documentation. My new qualifier would be: useful to subject matter experts, if there is enough training data.
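One cheap guard against the hallucinated-module problem is to check whether a suggested module and function actually exist before going any further. Here’s a minimal sketch using only Python’s standard `importlib`; the suggestion list in the demo is hypothetical (`pdftools_easy` stands in for a made-up name), and this only checks what’s installed in the current interpreter, not PyPI.

```python
import importlib
import importlib.util


def module_exists(name: str) -> bool:
    """Return True if `name` can be found on this interpreter's import path."""
    try:
        return importlib.util.find_spec(name) is not None
    except (ModuleNotFoundError, ValueError):
        # find_spec raises when a parent package of a dotted name is missing
        # (or the name is empty/invalid); treat that as "doesn't exist".
        return False


def function_exists(module_name: str, attr: str) -> bool:
    """Return True if `module_name` imports and exposes an attribute `attr`.

    Note: importing runs the module's top-level code.
    """
    if not module_exists(module_name):
        return False
    module = importlib.import_module(module_name)
    return hasattr(module, attr)


if __name__ == "__main__":
    # Hypothetical assistant suggestions: one real, two invented.
    suggestions = [("json", "loads"), ("json", "load_file"), ("pdftools_easy", "merge")]
    for mod, fn in suggestions:
        status = "ok" if function_exists(mod, fn) else "NOT FOUND"
        print(f"{mod}.{fn}: {status}")
```

A `NOT FOUND` doesn’t prove a package doesn’t exist somewhere, only that it isn’t installed here; it’s the cue to go look up the real documentation.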

It is sometimes good at building SQL code examples, but they almost always need fine-tuning since it doesn’t know the specifics of your schema.

Having said that, one time it gave me code that resulted in an error. I went back to GPT and said “This code you gave me is giving this error, can you fix it?” and all it would do is say something like “Correct, that code is wrong and will give an error.”
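The schema problem can be narrowed by checking an AI-drafted query against the real schema before running it. A minimal sketch, assuming SQLite through Python’s built-in `sqlite3` module (other databases have their own catalog queries); the `orders` table is a hypothetical stand-in for your actual database.

```python
from __future__ import annotations

import sqlite3


def schema_summary(conn: sqlite3.Connection) -> dict[str, list[str]]:
    """Map each table to its column names, read from SQLite's own catalog."""
    tables = [name for (name,) in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    # PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
    return {t: [col[1] for col in conn.execute(f'PRAGMA table_info("{t}")')] for t in tables}


def check_query(conn: sqlite3.Connection, sql: str) -> str | None:
    """Compile `sql` under EXPLAIN so it isn't actually run.

    Returns SQLite's error message (e.g. an unknown column), or None if it compiles.
    """
    try:
        conn.execute("EXPLAIN " + sql)
    except sqlite3.Error as exc:
        return str(exc)
    return None


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    # Hypothetical schema standing in for the real database.
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total_cents INTEGER)")
    print(schema_summary(conn))
    # An AI draft that guessed a column called `amount`:
    print(check_query(conn, "SELECT customer_id, SUM(amount) FROM orders GROUP BY customer_id"))
```

Pasting the `schema_summary` output into the prompt along with the question is one way to give the model the specifics it’s otherwise guessing at.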

AI is really good as a starting point for literally any document, report, or email you have to write. Put in as detailed a prompt as you can, describing the content, style, and length, and cut out two-thirds or more of your work. You’ll need to edit the result (somewhat heavily, probably), but it gives you the structure and a baseline.
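As an illustration of spelling out content, style, and length up front, here’s a small Python helper that assembles such a prompt. The field names and wording are assumptions made for the example, not a template any particular tool requires.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class DraftRequest:
    """The pieces the comment suggests describing: content, style, and length."""
    doc_type: str       # e.g. "email" or "status report"
    points: list[str]   # the actual content that must be covered
    style: str          # e.g. "warm but professional"
    max_words: int


def build_prompt(req: DraftRequest) -> str:
    """Assemble a detailed drafting prompt (illustrative wording only)."""
    bullets = "\n".join(f"- {p}" for p in req.points)
    return (
        f"Write a first draft. Document type: {req.doc_type}.\n"
        f"Cover these points:\n{bullets}\n"
        f"Style: {req.style}.\n"
        f"Length: at most {req.max_words} words.\n"
        "Do not add facts that are not in the points above."
    )


if __name__ == "__main__":
    print(build_prompt(DraftRequest(
        doc_type="email",
        points=["the quarterly report is delayed by one week", "nothing else changes"],
        style="apologetic but brief",
        max_words=120,
    )))
```

The last line of the prompt is there because the heavy editing mentioned above is usually about removing things the draft made up, not adding things.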

This is one of my two use cases for AI. I only recently found out, after a lifetime of being told I’m terrible at writing, that I’m actually really good at technical writing: things like guides, manuals, etc. that are quite literal and don’t have any soul or personality. The flip side is that I’m awful at writing things directed at people, like emails. So AI gives me a platform where I can enter exactly what I want to say and tell it to rewrite it in a specific tone or level of professionalism, and it works pretty great. I usually have to edit what it gives me so it flows better or to remove inaccurate language, but my emails sound so much better now! It’s also helped me put more personality into my resume and portfolio. So who knows, maybe it’ll help me get a better job?

I’ve been learning Docker over the last few weeks, and it’s been very helpful for writing and debugging docker-compose configs. My server now has 9 different services running on it.

I use it for Python development sometimes, maybe once per day. I’ll paste in a chunk of code and describe how I want it altered or fixed, and that usually goes pretty well. Or, if I need a generic function that I know has been coded a million times before, I’ll just ask ChatGPT for it.
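For a sense of the “coded a million times” category, a typical example is splitting an iterable into fixed-size chunks. A sketch in plain Python; note that Python 3.12 added `itertools.batched`, which does the same thing but yields tuples, exactly the kind of built-in an assistant may or may not mention.

```python
from __future__ import annotations

from itertools import islice
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def chunked(items: Iterable[T], size: int) -> Iterator[list[T]]:
    """Yield consecutive lists of `size` items; the last list may be shorter."""
    if size < 1:
        # As a generator, this is raised on the first iteration, not at call time.
        raise ValueError("size must be at least 1")
    it = iter(items)
    while chunk := list(islice(it, size)):
        yield chunk


if __name__ == "__main__":
    print(list(chunked(range(7), 3)))  # [[0, 1, 2], [3, 4, 5], [6]]
```

Running a couple of quick checks like the one in the `__main__` block is a cheap way to confirm a generated helper actually does what you asked before you build on it.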

It’s far from “useless” and has made me somewhat more productive. I can’t see it replacing anyone’s job, though; it’s more of a supplemental tool that increases output.

I’ve definitely run into this as well in my own self-hosting journey. When you’re learning, it’s easier to get it to draft up a config and learn what the options mean after the fact than it is to RTFM from the beginning.

If you already kinda know programming and are learning a new language or framework, it can be useful. You can ask it “Give me an if statement in Tcl” or whatever, and it will spit out something you can paste in to see if it works.

But remember that AI are like the fae: Do not trust them, and do not eat anything offered to you.

AI isn’t useless, but its current forms are just rebranded algorithms, with every company racing to get theirs out there. AI is a buzzword for tools that were never supposed to be labeled AI. Google has been doing summary excerpts for like a decade. People blindly trusted them and always said “Google told me”. I’d consider myself an expert on one particular car, and I can’t tell you how often those “answers” were straight-up wrong or completely irrelevant to that car (hint: the Lincoln LS does not have a blend door, so heat problems can’t be caused by a faulty blend door).

You cite Google searches and summarization as its strong points. The problem is, if you don’t know anything (or enough) about the topic, you’ll never know when it makes mistakes. With Wikipedia, journal articles, forum posts, or classes, mistakes are possible too. However, those get reviewed by knowledgeable people as they inform. Your AI results don’t get that review. Your AI results are pretending to be master of the universe, so their range of results is impossibly large. That output then gets taken as pure fact by a typical user. Sure, AI is a tool that can educate, but it gets enough wrong that I’d call it a net-neutral change to our collective knowledge. Just because it gives an answer confidently doesn’t mean it’s correct. It has a knack for missing context from more opinionated sources and reporting the exact opposite of what is true. Yes, it’s evolving, but keep in mind that one of the big tech companies put out an AI that recommended using Elmer’s glue to hold cheese on pizza and claimed cockroaches live in penises. ChatGPT had its hallucinatory days too; they just got forgotten due to Bard’s flop and Cortana’s unwelcome presence.

Use the other two comments currently here as an example. Ask it to make some code for you. See if it runs. Do you know how to code? If not, you’ll have no idea whether the code works correctly. You don’t know where it sourced it from, and you don’t know what it was trying to do. If you can’t verify it yourself, how can you trust it to be accurate?

The biggest gripe for me is that it doesn’t understand what it’s looking at. It doesn’t understand anything. It regurgitates some pattern of words it saw a few times. It chops up your input and tries to match it to some other group of words. It bundles it up with some generic, human-friendly language and tricks the average user into believing it’s sentient. It’s not intelligent, just artificial.

So what’s the use? If it were specifically trained for certain tasks, it’d probably do fine. But that’s what we already had with algorithmic functions and statistical machine learning, right? Parsing the entire internet in a few seconds, though? Not a chance.

I would generally say they’re great for anything where you’re happy being 100% right 90% of the time.

Troubleshooting technology.

I’ve been using Linux as my daily driver for a year and a half, and my learning is going a lot quicker thanks to AI. It’s so much easier to ask a question and get an answer than to search through Stack Overflow for 30 minutes.

That isn’t to say the LLM never gives terrible advice. In fact, two weeks ago I was digging through my logs for a potential intruder (false alarm), and the LLM gave me instructions that ended up deleting my journal logs completely.

The good far outweighs the bad for sure tho.

The Linux community specifically has an anti-AI tilt that is embarrassing at times. LLMs are amazing, and much like with random strangers on the internet, if you don’t blindly trust/follow everything they say, you’ll be just fine.

The best way I can think of AI is that it’s going through a bubble not unlike the early days of the internet. There were a lot of overvalued companies and scams, but it still ushered in a new era.

Another analogy that comes to mind is how people didn’t trust Wikipedia 20 years ago because anyone could edit it, and now it is one of the most trusted sources of information out there. AI will never be as ‘dumb’ as it is today, which is ironic because a lot of the perspectives I see on AI were formed around free models from 2023.

I’ve done several AI/ML projects at nation, state, and landscape scale. I work mostly on problems that can be solved, or at least goals that can be worked towards, using computer vision, but I also do all kinds of other ML stuff.

So one example is a project I did for this group: https://www.swfwmd.state.fl.us/resources/data-maps

Southwest Florida Water Management District (aka “Swiftmud”). They had been doing manual updates to a land-cover/land-use map and wanted something more consistent, automated, and faster. There are several thousand square miles under their management, and they needed annual updates on how land was being used and what cover type or condition it was in. I developed a hybrid approach using random forests, superpixels, and U-Nets to look for regions of likely change, and then to try to identify the “to” and “from” classes of change. I’m pretty sure my data products and methods are still in use, largely as I developed them. I built them right as U-Nets were becoming the backbone of modern image analysis (think early 2016), which is why we still had some RF in there (dating myself).

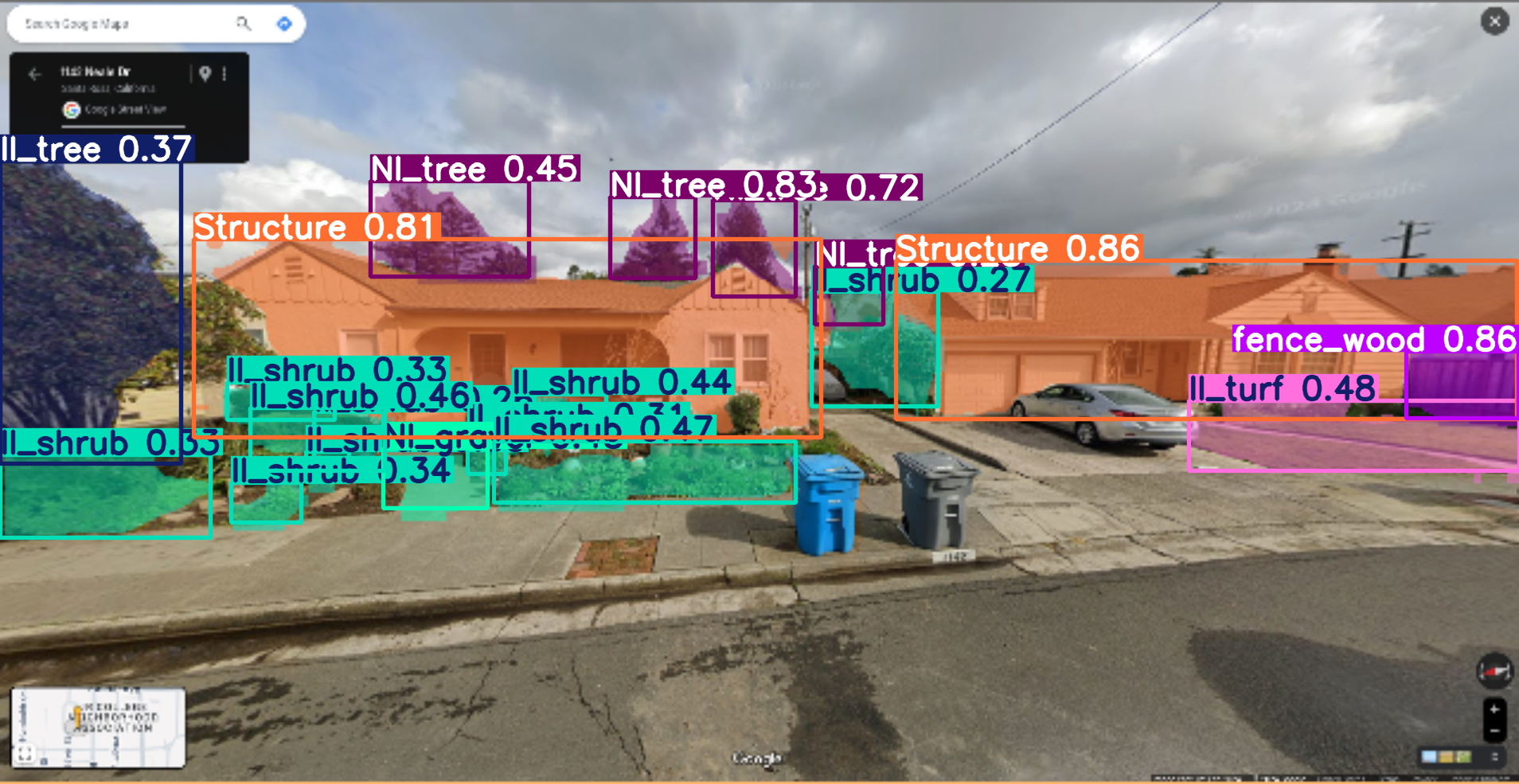
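The “to”/“from” bookkeeping can be illustrated with classic post-classification change detection: cross-tabulate two classified maps pixel by pixel. This is a simplified stand-in, not the random forest/superpixel/U-Net pipeline described above; the class names and tiny rasters are hypothetical, and real rasters would normally go through NumPy or a GIS library rather than nested lists.

```python
from __future__ import annotations


def change_matrix(before: list[list[int]], after: list[list[int]], n_classes: int) -> list[list[int]]:
    """Cell [i][j] counts pixels labeled class i in `before` and class j in `after`."""
    if len(before) != len(after) or any(len(b) != len(a) for b, a in zip(before, after)):
        raise ValueError("rasters must have the same shape")
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for row_b, row_a in zip(before, after):
        for b, a in zip(row_b, row_a):
            matrix[b][a] += 1
    return matrix


def transitions(matrix: list[list[int]], names: list[str]) -> list[tuple[str, str, int]]:
    """List (from, to, count) for off-diagonal cells, i.e. pixels whose class changed."""
    return [
        (names[i], names[j], count)
        for i, row in enumerate(matrix)
        for j, count in enumerate(row)
        if i != j and count > 0
    ]


if __name__ == "__main__":
    names = ["water", "forest", "urban"]  # hypothetical land-cover classes
    year1 = [[0, 1, 1], [1, 1, 2]]
    year2 = [[0, 1, 2], [2, 1, 2]]
    for src, dst, count in transitions(change_matrix(year1, year2, 3), names):
        print(f"{src} -> {dst}: {count} px")  # prints: forest -> urban: 2 px
```

The diagonal of the matrix is “no change”; everything off it is a candidate change region with its from/to classes already attached.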

Another project I did was for the State of California. I developed both the computer vision and statistical approaches for estimating outdoor water use for almost all residential properties in the state. I think these numbers are still in use today (in fact, I know they are), and they haven’t been updated since I developed them. That project was at a 1 sq ft pixel resolution and was just about wall-to-wall mapping for the entire state, effectively putting down an estimate for every single scrap of turf grass in the state and whether California was going to allocate you a water budget for it or not. So if you got a nasty-gram from the water company about irrigation, my bad.
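To make the per-property arithmetic concrete: at 1 sq ft per pixel, turf area is just a pixel count, and one common way landscape water budgets turn area into demand is reference evapotranspiration (ETo, inches/year) times an adjustment factor times area, converted to gallons. This is an assumed, simplified model for illustration, not the statistical approach described above; the ETo and adjustment-factor values are placeholders.

```python
from __future__ import annotations

# One inch of water over one square foot is 144 cubic inches; a US gallon is 231 cubic inches.
GALLONS_PER_INCH_SQFT = 144 / 231  # about 0.623


def turf_area_sqft(mask: list[list[int]], pixel_size_ft: float = 1.0) -> float:
    """Count turf pixels (1s) in a 0/1 mask and convert the count to square feet."""
    turf_pixels = sum(sum(row) for row in mask)
    return turf_pixels * pixel_size_ft ** 2


def annual_water_gal(area_sqft: float, eto_in_per_year: float, adjustment: float) -> float:
    """Assumed demand model: ETo (in/yr) x adjustment factor x area (sq ft), in gallons."""
    return eto_in_per_year * adjustment * area_sqft * GALLONS_PER_INCH_SQFT


if __name__ == "__main__":
    # Hypothetical 4 x 5 ft patch from a 1 sq ft/pixel turf classification.
    mask = [
        [1, 1, 1, 0, 0],
        [1, 1, 1, 0, 0],
        [1, 1, 0, 0, 0],
        [0, 0, 0, 0, 0],
    ]
    area = turf_area_sqft(mask)
    # Placeholder inputs: 50 in/yr ETo and a 0.8 adjustment factor.
    print(f"{area:.0f} sq ft of turf, ~{annual_water_gal(area, 50, 0.8):.0f} gal/yr")
```

At statewide scale the hard part is the classification that produces the mask; the arithmetic on top of it stays about this simple.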

These days I work on a small team focused on identifying features relevant to wildfire risk. I’m trying to see if I can put together a short video of what I’m working on right now as I post this.

Example, fresh off the presses, for some random house in California:

I sometimes have Señor GPT rewrite my nonsensical ramblings into coherent and decipherable text. I recently did that for a paper in my last class. lol

I wrote a bunch of shit, had GPT rewrite it, added a couple of quotes from my sources, and called it a day. I’m also currently on a single-player, open-world adventure with GPT. The townspeople and I just confronted the suspicious characters on the edge of town. They claim not to be baddies, but they’re being super sus. I might just attack anyway.

I’ve learned more C/C++ programming from the GitHub Copilot plugin than I ever did in my entire 42-year life. I’m not a professional, though, just a hobbyist. I used to struggle through PHP and other languages back in the day, but after a year of Copilot I’m now leveraging templates and the C++ STL with ease and feelin’ like a wizard.

Hell maybe I’ll even try Rust.

Customer support tier .5

It can be hella great for finding what you need on a big website that is poorly organized, poorly laid out, or just enormous in content. I could see it being incredible for things like irs.gov, your healthcare provider’s website, etc., getting the requested content into users’ hands without them having to familiarize themselves with constantly changing layouts, pages, branding, etc.

To go back to the IRS example, some websites in the last 5 years have started to have better content library search functionality, but for me, having an AI able to contextualize the request and then get you specifically what you want would be incredible. “Tax rule for x kind of business in y situation for 2024”: that shit sometimes takes hours even if you’re pretty competent, and current websites might just say “here is the 2024 tax code PLOP” or “here is an answer that doesn’t apply to your situation”, etc. Same with “tomato growing tips for zone 3a during drought” on a gardening site, etc.
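The “find the right page” half of this can be sketched without any AI at all: rank a site’s pages by TF-IDF overlap with the query. An AI assistant would typically sit on top of a retrieval step like this (often called retrieval-augmented generation) and summarize the top hits; that layer isn’t shown. The page paths and text below are hypothetical.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rank_pages(query: str, pages: dict[str, str], top_k: int = 3) -> list[tuple[str, float]]:
    """Score pages by summed TF-IDF weight of the query terms; return top matches with score > 0."""
    docs = {path: Counter(tokenize(body)) for path, body in pages.items()}
    n_docs = len(docs)
    # Document frequency: in how many pages each term appears.
    df = Counter(term for counts in docs.values() for term in counts)
    query_terms = set(tokenize(query))
    scored = []
    for path, counts in docs.items():
        total = sum(counts.values()) or 1
        score = 0.0
        for term in counts.keys() & query_terms:
            # Smoothed IDF: terms that appear on fewer pages count for more.
            idf = math.log((1 + n_docs) / (1 + df[term])) + 1
            score += (counts[term] / total) * idf
        scored.append((path, score))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [pair for pair in scored[:top_k] if pair[1] > 0]


if __name__ == "__main__":
    pages = {  # hypothetical site content
        "/garden/tomatoes-drought": "Tomato growing tips for dry summers and drought in cold zones such as zone 3a.",
        "/garden/roses": "Pruning roses in spring.",
        "/tax/2024-small-business": "2024 tax rules for small business owners.",
    }
    print(rank_pages("tomato growing tips for zone 3a during drought", pages))
```

Plain keyword ranking like this is exactly what falls over on paraphrased questions, which is where the “contextualize the request” part the comment wants would have to come in.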

I’m in HR, so benefits are a big one. There’s an absolute mountain of content, and even experts who understand it can’t have perfect recall, so getting quick, easy answers out of that mountain of text seems like an area where AI could deliver real value.

That said, companies using AI as an excuse to eliminate support jobs because customers “have AI” are greedy dipshits. AI and LLMs are a risk at best, and outside of a narrow library and intense testing, they’re always going to be more work for the company, since you not only have to fix the wrong-answer situations but also get the right answer the old-fashioned way. You still need humans, and hopefully AI can make their work more interesting, nuanced, and fulfilling.

Entertainment.

I needed a simple script to combine JPEGs into a PDF. I tried to write a Python script, but it’s been years since I’ve programmed anything, and I was intermediate at best. My script was riddled with errors and would not run. I asked ChatGPT to write the script for me, and the second or third attempt worked great. The first two only failed because my prompts were bad; I had never used ChatGPT before.
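For reference, here’s a minimal standard-library sketch of that kind of script (not the one ChatGPT produced). It embeds each JPEG unchanged into its own PDF page using the PDF `/DCTDecode` filter, sizing each page at one point per pixel. Assumptions: baseline 8-bit grayscale or RGB JPEGs (Adobe-style CMYK JPEGs may render with inverted colors), and EXIF rotation is ignored. In practice, Pillow’s `Image.save(..., save_all=True, append_images=[...])` or the `img2pdf` package are the usual shortcuts.

```python
from __future__ import annotations

import struct
import sys
from pathlib import Path

# Start-of-frame markers that carry the image size (0xC4, 0xC8, 0xCC are not SOF markers).
SOF_MARKERS = {0xC0, 0xC1, 0xC2, 0xC3, 0xC5, 0xC6, 0xC7, 0xC9, 0xCA, 0xCB, 0xCD, 0xCE, 0xCF}
COLOR_SPACES = {1: b"/DeviceGray", 3: b"/DeviceRGB", 4: b"/DeviceCMYK"}


def jpeg_info(data: bytes) -> tuple[int, int, int]:
    """Return (width, height, components) by walking the JPEG marker segments."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG (missing SOI marker)")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError("corrupt JPEG marker stream")
        marker = data[pos + 1]
        if marker == 0xFF:  # padding byte; the real marker follows
            pos += 1
            continue
        (seg_len,) = struct.unpack(">H", data[pos + 2:pos + 4])
        if marker in SOF_MARKERS:
            # Segment layout: length(2) precision(1) height(2) width(2) components(1)
            height, width = struct.unpack(">HH", data[pos + 5:pos + 9])
            return width, height, data[pos + 9]
        pos += 2 + seg_len  # skip marker bytes plus segment (length counts itself)
    raise ValueError("no SOF marker found")


def build_pdf(jpegs: list[bytes]) -> bytes:
    """Return a PDF with one page per JPEG, embedding each file unchanged via /DCTDecode."""
    objects: list[bytes] = []  # objects[n - 1] is the body of PDF object n

    def add(body: bytes) -> int:
        objects.append(body)
        return len(objects)

    catalog_id, pages_id = add(b""), add(b"")  # placeholders, filled in below
    page_ids = []
    for data in jpegs:
        width, height, comps = jpeg_info(data)
        image_id = add(
            b"<< /Type /XObject /Subtype /Image /Width %d /Height %d /ColorSpace %s "
            b"/BitsPerComponent 8 /Filter /DCTDecode /Length %d >>\nstream\n"
            % (width, height, COLOR_SPACES[comps], len(data))
            + data + b"\nendstream"
        )
        # Page is the image's pixel size in points; scale the unit-square image to fill it.
        content = b"q %d 0 0 %d 0 0 cm /Im0 Do Q" % (width, height)
        content_id = add(b"<< /Length %d >>\nstream\n%s\nendstream" % (len(content), content))
        page_ids.append(add(
            b"<< /Type /Page /Parent %d 0 R /MediaBox [0 0 %d %d] "
            b"/Resources << /XObject << /Im0 %d 0 R >> >> /Contents %d 0 R >>"
            % (pages_id, width, height, image_id, content_id)
        ))
    kids = b" ".join(b"%d 0 R" % n for n in page_ids)
    objects[pages_id - 1] = b"<< /Type /Pages /Kids [%s] /Count %d >>" % (kids, len(page_ids))
    objects[catalog_id - 1] = b"<< /Type /Catalog /Pages %d 0 R >>" % pages_id

    out = bytearray(b"%PDF-1.4\n")
    offsets = []
    for num, body in enumerate(objects, start=1):
        offsets.append(len(out))  # byte offset of "N 0 obj", recorded in the xref table
        out += b"%d 0 obj\n%s\nendobj\n" % (num, body)
    xref_at = len(out)
    out += b"xref\n0 %d\n0000000000 65535 f \n" % (len(objects) + 1)
    for off in offsets:
        out += b"%010d 00000 n \n" % off  # each xref entry is exactly 20 bytes
    out += b"trailer\n<< /Size %d /Root %d 0 R >>\nstartxref\n%d\n%%%%EOF\n" % (
        len(objects) + 1, catalog_id, xref_at)
    return bytes(out)


if __name__ == "__main__":
    if len(sys.argv) < 3:
        print("usage: python jpegs_to_pdf.py out.pdf first.jpg [more.jpg ...]")
    else:
        out_path, *inputs = sys.argv[1:]
        Path(out_path).write_bytes(build_pdf([Path(p).read_bytes() for p in inputs]))
```

Embedding the JPEG bytes as-is (rather than decoding and re-encoding) keeps the output lossless and the script dependency-free, at the cost of only handling the JPEG variants listed above.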

I haven’t seen any other comments mention one of my use cases, so I’ll give it a stab. My first use case, which I mentioned in another comment, is just adding a specific tone to emails, which I’m bad at doing myself. But my second use case is more controversial, and I still don’t know how to feel about it.

I’m a graphic designer, and as with most advances in design/art technology, if you don’t learn what’s new, you will fall behind and your usefulness will wane. I’ve always been very tech-savvy and positive about most new tech, so I like to stay up to speed both for my job and out of self-interest.

So how do I use AI for graphic design? The things I think have the best use case and are least controversial are the AI tools that help you edit photos. In the past, I have spent loads of time editing frizzy curly hair so I can cut out a person. A couple of years ago, Adobe introduced some tools to make that process easier, and they worked OK, but they weren’t a massive time saver. Then they launched the AI-assisted version, and holy shit, it works perfectly every time. Like, give it the frizziest hair on a similarly colored, textured background and it will give you the perfect cutout in a minute, tops. That’s the kind of shit I want from AI. More tools that eliminate tedious processes!!

However, there is another, more controversial use case: generative AI. I’ve played with it a lot, and the tools work fantastically. They get you started with images you can splice together to make what you really envisioned, or you can use them to do simple things like seamlessly removing objects or adding in a background that didn’t exist. I once made a design in an illustrative style by inputting loads of images that fit the part, then vectorizing all the generated options and using pieces from those options to make what I really wanted. I was really proud of it, especially since I’m not an illustrator and don’t have the skills to illustrate what I envisioned by hand. But that’s where things get controversial.

I had to input the work of other people to achieve this. At the moment, I can’t use anything generative commercially, even though Adobe is very nonchalant about it. My company has taken a firm stance on it, which is nice, but it means I can really only use that aspect for fun, even though it would be very useful in some situations.

TLDR: I use AI to give my writing style the right tone, to save loads of time editing photos, and to create images I don’t have the skills to create by hand (only for funzies).

In Premiere, it’s great for generating captions. But I’m cautious, since it:

- depends on their servers: they upload your stuff, manipulate it, and bring it back;

- is therefore 100% aligned with the subscription-model hell they’ve been practicing for more than a dozen years now;

- makes you always online and always on the latest version to stay competitive, even if you dislike certain changes they introduced.

In a sense, it’s the missing brick in their DRM wall that ties it all together. Neither their stock content nor their cloud stuff felt like that natural an obstacle. And while it’s small now, I think they’ll only make the gap between (alleged) pirates and their always-online customers bigger. Like, the next thing they’re gonna do is make the healing brush in every editor a server-only tool, scrapping the pretty great local version they have now.

What sucks is that if there were no commercial part here (i.e. like how you’re doing it just for fun, or if we lived in a magical world where we all just agreed that creative works were the shared output of humanity as a whole), there would be no problem; we’d all be free to use what we need to make new things however we want. But there is a commercial part to it: somebody is trying to profit from the collective work of others, and that makes it unethical.