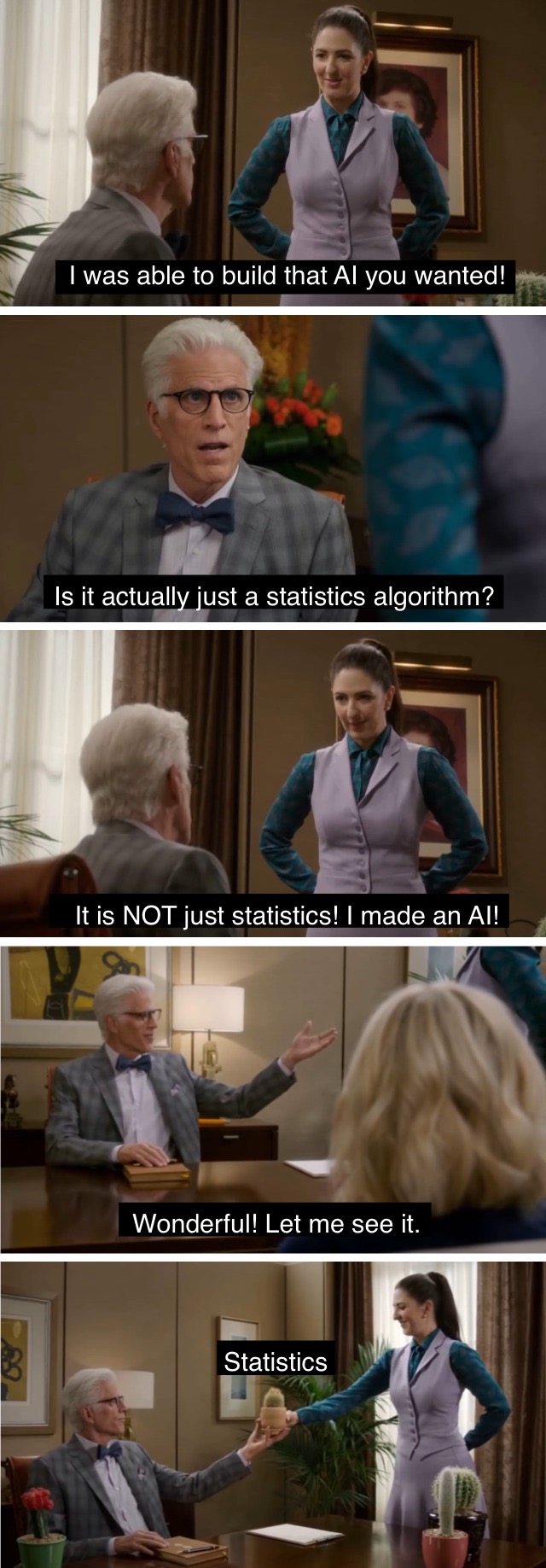

Is there a non-AI-enhanced one that would be less prone to random hallucinations?

Non-AI options can also have "hallucinations", i.e., false positives and false negatives, so if the AI one has a lower false positive/false negative rate, I'm all for it.

Non-AI options are comprehensible so we can understand why they’re failing. When it comes to AI systems we usually can’t reason about why or when they’ll fail.

Are hallucinations a problem outside of LLMs?

I wouldn’t trust a program that can’t accurately count fingers to detect diseases.

But that’s just me.