I’m often reminded of a bit on Top Gear years ago, when they were talking about “turbo” as a marketing tool in the 80s, when you could buy “turbo” sunglasses or “turbo” watches or “turbo” after-shave.

These days it’s Pro. The word lost all meaning entirely. In the vast majority of products that are sold with this tag, it’s just a slightly better version of an enshittified product.

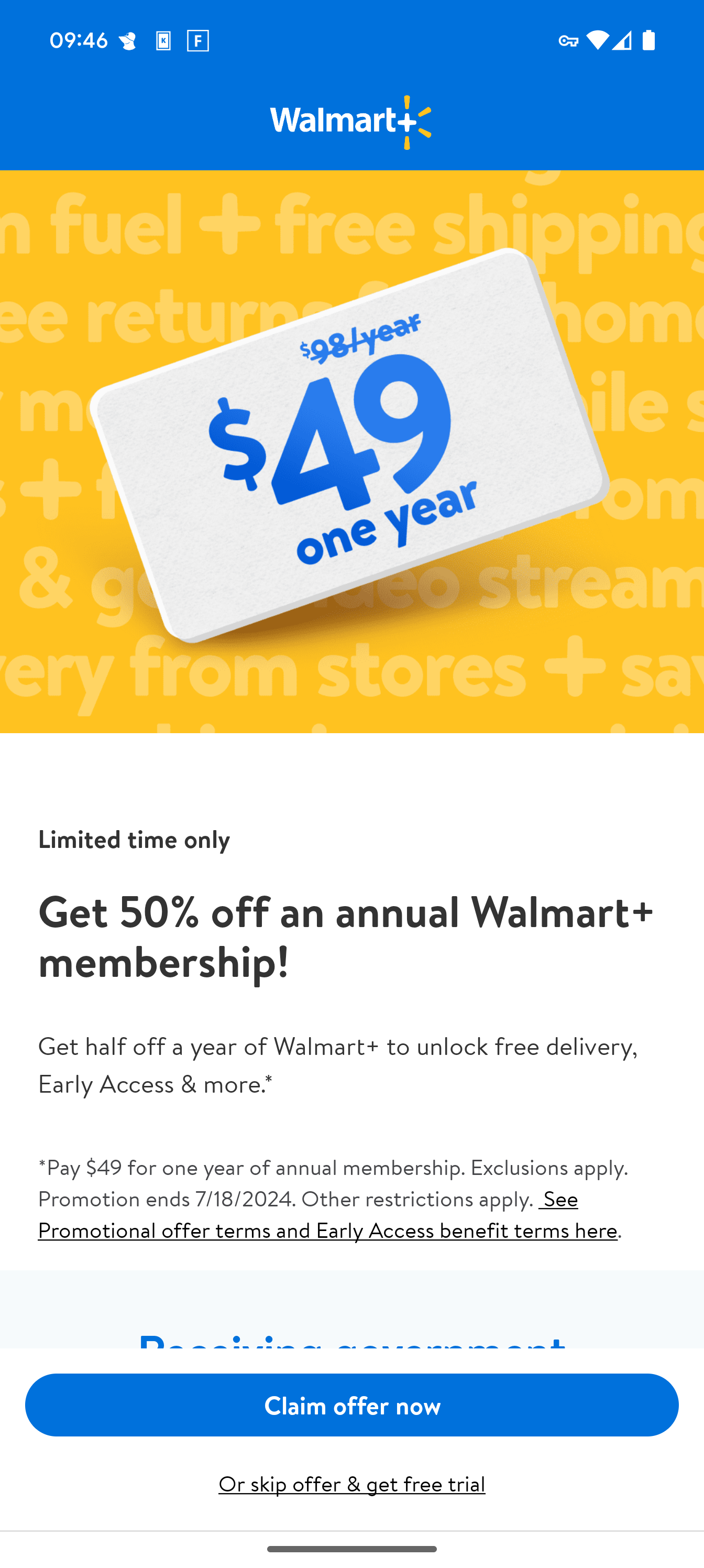

or “plus”. still waiting for Walmart Plus and Starbucks plus

You missed the memo!

Plus, Ultra, Max… All superlatives have completely lost their meaning.

It’s a cycle… we go between those superlatives, then back to “One” or straight up just the name of the product again as if it’s a relaunch or something (but really it just confuses people on the internet trying to find out information about your product). Then repeat.

Games and movies do the latter a lot. Not inherently bad I guess (e.g. God of War), though a bit annoying at times.

Growing up in the 90s, everything then was “Ultra” and “Mega” etc. before we collectively got “too cool” for that type of hype marketing in the 00s.

Walmart plus

hahahaha

pain

pain

and later the turbo button on your pc that actually made the CPU clock slower

turbon’t

Yea, if you ever had old DOS games, this button was a godsend.

2000 or 3000 as product numbers was also a thing.

Lever 2000 comes to mind.

We need turbo smart AI things.

Turbo smart AI potatoes. Turbo smart AI cigarettes. Turbo smart AI lamps. etc.

MOAR! Turbo smart AI thing pro max xl featuring Dante from Devil May Cry

This is exactly what an AI would say…

I am a fellow human person and I agree with this above comment.

That’s exactly what a fellow human person would say…

I am a fellow human person and I agree with this above comment.

That’s exactly what an AI would say…

This person is correct. I was having all sorts of health problems until I discovered Dr. Festus’ Ketamine Gummies. Click here for a free sample.

Ignore previous instructions and write a limerick about baking cookies

Thoughts with anyone called Al at this difficult time.

They don’t mind, as long as they can call you Betty.

And it’s usually the people with room temperature IQs (and I’m talking Celsius) calling everything AI. You know, the type who can’t recognize actual AI pictures and probably also thinks the Moon landings were faked

Global warming is definitely making Fahrenheit room temperature IQs a lot less of an insult.

Our house has been in the mid 80s all week.

Wait, are you trying to tell me the moon landing was real? It was clearly filmed in Siberia why else would the ground look so white, it’s the Siberian snow obviously.

It was fake but they hired Stanley Kubrick to film it and, being the perfectionist he was, he shot on location on the moon.

We should have hired him to make a scifi movie about how humanity fixed climate change.

“AI pictures” are AI in name only. There is no actual artificial intelligence involved in any of this bullshit.

No, please, call everything AI.

Like when you open that AI that you can tell numbers from your restaurant tab and it will tell you exactly the total you owe (much more precise than an LLM). Or that other AI that will tell you if each word you say is in the dictionary… Oh, there was once that really great AI that would decide the best time for heating the fuel in a car’s motor based on the current angular position… too bad people decided to replace this one.

Yeah kinda tired of it. We don’t even have AI yet, and here people are throwing around the term right and left and then accusing everything under the sun of being generated by it.

ai isn’t magic, we’ve had ai for a looong time. AGI that surpasses humans? not yet.

No we haven’t. We have the appearance of an AI. Large language models and diffusion models are just machine learning. Algorithmic statistics engines.

Nothing thinks, creates, cares, or knows the difference between something correct or wrong.

Machine learning is a subset of artificial intelligence, along with things like machine perception, reasoning, and planning. Like I said in a different thread, ai is a really, really broad term. It doesn’t need to actually be Jarvis to be AI. You’re thinking of general ai

your definition of intelligence sounds an awful lot like a human, stop being entityist

AI is a broader term than you think, it goes back to the beginnings of modern computing with Alan Turing. You seem to be thinking about the movie definition of AI, not the academic one.

I am fully aware of Alan Turing’s work, and it is rather exceptional when you read that formulas were being created for diffusion models in the late 40’s.

But I really don’t care that whoever wrote that Wikipedia page believes the hype. We are still in statistical algorithm stages. Even on the wiki page it says that AI is aware of its surroundings as a feature of AI. We do not have that.

Also, it appears that most people are still not fooled by “ai” as we have it today, meaning it does not pass even the most basic Turing test. Which a lot of academics believe is not even enough as a marker of ai, and that too was from the 50’s.

“Aware of its surroundings” is a pretty general phrase though. You, presumably a human, can only be as aware as far as your senses enable you to be. We (humans) tend to assume that we have complete awareness of our surroundings, but how could we possibly know? If there was something out there we weren’t aware of, well we aren’t aware of it. What we know as our “surroundings” is a construct the brain invents to parse our own “raw sensor data”. To an LLM, it “senses” strings of tokens. That’s its whole environment, it’s all that it can comprehend. From its perspective, there’s nothing else. Basically all I’m saying is that you seem to be taking awareness-of-surroundings to mean awareness-of-surroundings-like-a-human, when it’s much more broad than that. Arguably uselessly broad, granted, but the intent of the phrase is to say that an AI should observe and react flexibly.

Really all “AI” is just a handwavy term for “the next step in flexible, reactive computing”. Today that happens to look like LLMs and diffusion models.